In my previous post (here), I wrote about obtaining a confidence interval for the estimate of an interaction contrast. I demonstrated, for a simple two-way independent factorial design, how to obtain a confidence interval by making use of the information in an ANOVA source table and estimates of the marginal means and how a custom contrast estimate can be obtained with SPSS.

One of the results of the analysis in the previous post was that the 95% confidence interval for the interaction was very wide. The estimate was .77, 95% CI [0.04, 1.49]. Suppose that it is theoretically or practically important to know the value of the contrast more precisely. (Some researchers will be content that the CI allows a directional, qualitative interpretation: there seems to be a positive interaction effect. Others, more interested in the quantitative questions, may not be so easily satisfied.) Let’s see how we can plan the research to obtain a more precise estimate. In other words, let’s plan for precision.

Of course, there are several ways in which the precision of the estimate can be increased. For instance, we could use measurement procedures that are designed to obtain reliable data, we could change the experimental design, for example by switching to a repeated measures (crossed) design, and/or we could increase the number of observations. An example of the latter would be to increase the number of participants and/or the number of observations per participant. We will only consider the option of increasing the number of participants, and keep the independent factorial design, although in reality we would of course also strive for a measurement instrument that generally gives us highly reliable data. (By the way, it is possible to use my Precision application to investigate the effects of changing the experimental design on the expected precision of contrast estimates in studies with 1 fixed factor and 2 random factors).

The plan for the rest of this post is as follows. We will focus on getting a short confidence interval for our interaction estimate, and we will do that by considering the half-width of the interval, the Margin of Error (MOE). First we will try to find a sample size that gives us an expected MOE (in repeated replication of the experiment with new random samples) no more than a target MOE. Second, we will try to find a sample size that gives a MOE smaller than or equal to our target MOE in a specifiable percentage (say, 80% or 90%) of replication experiments. The latter approach is called planning with assurance.

Let us get back to some of the SPSS output we considered in the previous post to get the ingredients we need for sample size planning. First, the ANOVA table.

Table 1. ANOVA source table

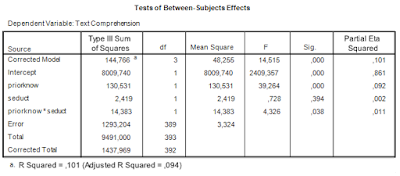

We are interested in estimating and optimizing the precision of an interaction contrast estimate. The first things we need are an expression of the error variance needed to calculate the standard error of the estimate and the degrees of freedom that were used in estimating the error variance. In general, the error variance needed is the same error variance you would use in performing an F-test for the specific effect, in this case the interaction effect.

Thus, we note the error variance used to test the interaction effect, i.e. mean square error, and the degrees of freedom. The value of mean square error is 3.324, and the degrees of freedom are 389. Note that this value is the total sample size minus the number of conditions (393 – 4 = 389), or, equivalently, the total sample size minus the degrees of freedom of the intercept, the main effects, and the interaction (393 – (1 + 1 + 1 + 1) = 389). I will call these degrees of freedom the error degrees of freedom, dfe.

MOE is obtained by multiplying the standard error of the estimate by a critical t-value whose degrees of freedom equal the error degrees of freedom.

The standard error of the contrast estimate is

$$\hat{\sigma}_{\hat{\psi}} = \sqrt{\sum_{i} c_i^2 \frac{MS_e}{n_i}},$$

where $MS_e$ is mean square error, $c_i$ is the contrast weight for the i-th condition mean, and $n_i$ the number of observations (in our example participants) in treatment condition i. Note that $MS_e / n_i$ is the variance of treatment mean i, the square root of which gives the familiar standard error of the mean.

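The contrast weights we used to estimate the 2 x 2 interaction were {-1, 1, 1, -1}. Each squared weight therefore equals 1, so the expression for MOE becomes

$$MOE = t_{.975}(df_e)\sqrt{MS_e \sum_{i} \frac{1}{n_i}},$$

where $t_{.975}(df_e)$ is the critical t-value with the error degrees of freedom, $MS_e$ is mean square error, and $n_i$ is the number of participants in condition i.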

Thus, suppose we have the independent 2×2 factorial design with 100 participants in each of the four groups, and the true value of Mean Square Error is 3.324, then MOE for the contrast estimate equals

$$MOE = t_{.975}(396)\sqrt{4 \times \frac{3.324}{100}} \approx 0.717.$$

Note that this is the value of MOE we obtain on average in repeated replications with new samples, if we use sample sizes of 100 (total number of participants is 400) and if the true value of the error variance is 3.324. The value is close to the value we obtained in the previous post (MOE = 0.72) because the sample sizes were very close to 100 per group.
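The calculation can be checked in R. What follows is a minimal sketch: the function name `contrast_moe` and its argument names are illustrative and do not come from the previous post, and the sketch assumes an independent-groups design in which the error degrees of freedom equal the total sample size minus the number of groups.

```r
# Sketch: MOE of a contrast estimate in an independent-groups design.
# contrast_moe and its argument names are illustrative assumptions.
contrast_moe <- function(weights, n, mse, conf = .95) {
  df_e <- sum(n) - length(n)               # error degrees of freedom
  se   <- sqrt(sum(weights^2 * mse / n))   # standard error of the contrast
  qt(1 - (1 - conf) / 2, df_e) * se        # critical t-value times standard error
}

# 2 x 2 interaction contrast, 100 participants per group, MSE = 3.324
moe_100 <- contrast_moe(c(-1, 1, 1, -1), n = rep(100, 4), mse = 3.324)
round(moe_100, 2)
## [1] 0.72
```

Because the function takes a vector of group sizes, it can also be used for unequal group sizes, as long as the sizes are given in the same order as the contrast weights.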

Now, we found the original confidence interval too wide, and we have just seen how 100 participants per group does not really help. MOE is only slightly smaller than our originally obtained MOE. We need to set a target MOE and then figure out how many participants we need to get that target MOE.

Intermezzo: Rules of thumb for target MOE

(Here are some updated rules of thumb: https://the-small-s-scientist.blogspot.com/2018/11/contrast-tutorial.html)

In the absence of theoretical or practical considerations about the precision we want, we may want to use rules of thumb. My (very first proposal for) rules of thumb are based on the default interpretations of Cohen’s d: I consider absolute values of d ≤ .10 to be negligible, d = .20 small, d = .50 medium, and d = .80 large. (I really do not like rules-of-thumb, because using them is a sign that you are not thinking).

Now, suppose that we interpret the confidence interval as a range of plausible values for the true value of the effect size. It is not at all clear to me what such a supposition entails, but let’s simply take it for granted right now (please don’t). Then, I think it is reasonable to say that being able to distinguish between small and negligible effect sizes is relatively precise. Thus, a MOE of .05 (pooled) standard deviations can be considered precise: assuming we know the value of the standard deviation, the 95% CI for a small effect (d = .20) is on average [.15, .25], so negligible effects (d ≤ .10) lie outside the interval and will not be deemed plausible.

By essentially the same reasoning, if we cannot distinguish between large and negligible effects, we are not estimating things very precisely. Therefore, a MOE of .80 standard deviations can be considered not very precise. On average, the CI for an existing large effect will be [0, 1.60], so it includes both negligible and very large effects as plausible values.

For medium precision (does it make sense to speak of medium precision?) I would like to suggest a MOE of .20-.25 standard deviations. On average, with this value for MOE, if there is a medium effect, small effects and large effects are relatively implausible. In the case of small effects, medium precision entails that on average both effects in the opposite direction and medium effects are among the plausible values.

Of course, I am interpreting the d-values as strict boundaries, but the scale is continuous, not categorical. So instead of small and large effect sizes, it’s better to speak of smallish and largish effect sizes. And as soon as I find a variant for medium effect sizes I will also include that term in the list.

Note: sample size planning may indicate that precision of MOE = .20-.25 standard deviations is unattainable. In that case, we will simply have to accept that our precision does not lead to confident conclusions about the population effect size. (Once I showed one of my colleagues my precision app, during which he said: “that amount of precision requires a very large sample. I do not like your ideas about sample size planning”).

(By the way, I am also considering rules-of-thumb for target MOE that include assurance. Something like: high precision is when repeated experiments have a high probability of distinguishing small and negligible effects; in that case the average MOE will be smaller than .05).
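To use these rules of thumb in sample size planning, a MOE expressed in standard deviations has to be converted to the scale of the dependent variable. A minimal sketch, assuming that the square root of mean square error from Table 1 (3.324) is a reasonable stand-in for the pooled standard deviation; the variable names and labels are illustrative.

```r
# Convert rule-of-thumb MOEs (in standard deviations) to raw-score units.
# Assumption: sqrt(MSE) serves as the estimate of the pooled standard deviation.
mse       <- 3.324
sd_pooled <- sqrt(mse)

moe_in_sd  <- c(precise = .05, medium_low = .20, medium_high = .25, imprecise = .80)
target_moe <- moe_in_sd * sd_pooled

round(target_moe, 4)
```

Because the standard deviation is itself an estimate, these raw-score targets are approximate.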

Planning for precision

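With equal sample sizes n in each of the four conditions, the expected MOE for the interaction contrast is

$$MOE = 2\,t_{.975}(df_e)\sqrt{\frac{MS_e}{n}},$$

where $df_e = 4(n - 1)$, since we are considering the 2×2 design.

The target MOE used below is 0.4558, which corresponds to .25 standard deviations when the standard deviation is taken to be the square root of mean square error ($.25 \times \sqrt{3.324} \approx 0.4558$).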

First we create a function to calculate MOE:

    # Expected MOE for the 2x2 interaction contrast with n participants per group
    MOE <- function(n) {
      2 * qt(.975, 4 * (n - 1)) * sqrt(3.324 / n)
    }

Next, we will define a loss function and use R’s built-in optimize function to determine the sample size. Note that the loss function calculates the squared difference between MOE based on a sample size n and our target MOE. The optimize function minimizes that squared difference in terms of sample size n, searching the interval from n = 100 to n = 1000.

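    # Squared difference between MOE at sample size n and the target MOE
    loss <- function(n) {
      (MOE(n) - 0.4558)^2
    }

    # Search for the minimizing n in the interval [100, 1000]
    optimize(loss, c(100, 1000))

    ## $minimum
    ## [1] 246.4563
    ##
    ## $objective
    ## [1] 8.591375e-18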

Thus, according to the optimize function, we need 247 participants per group (246.46 rounded up; total N = 988) to get an expected MOE no larger than our target MOE. The expected MOE then equals 0.4553, which you can confirm by using the MOE function we made above.
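Because optimize searches over real-valued n, the result has to be rounded up by hand. As a cross-check, the sketch below simply steps through whole-number sample sizes and returns the first one whose expected MOE does not exceed the target. The helper names `expected_moe` and `smallest_n` are illustrative, and the approach relies on expected MOE decreasing as n increases, which the formula implies.

```r
# Expected MOE for the 2x2 interaction contrast with n participants per group.
# The default mse is the value from the ANOVA table, assumed to be the true value.
expected_moe <- function(n, mse = 3.324) {
  2 * qt(.975, 4 * (n - 1)) * sqrt(mse / n)
}

# Smallest whole-number n (per group) with expected MOE <= target.
smallest_n <- function(target, candidates = 2:1000) {
  ok <- expected_moe(candidates) <= target
  if (!any(ok)) stop("no candidate n reaches the target MOE")
  candidates[which(ok)[1]]
}

n_needed <- smallest_n(0.4558)
n_needed
## [1] 247
round(expected_moe(n_needed), 4)
## [1] 0.4553
```

The integer search agrees with the rounded-up optimize result, and it avoids having to pick a loss function.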

Planning with assurance

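To plan with assurance, we use the MOE that is not exceeded in a proportion $\gamma$ of replication experiments:

$$MOE_{\gamma} = 2\,t_{.975}(df_e)\sqrt{\frac{MS_e}{n}\cdot\frac{\chi^2_{\gamma}(df_e)}{df_e}},$$

where $\chi^2_{\gamma}(df_e)$ is the $\gamma$ quantile of the chi-square distribution with $df_e = 4(n - 1)$ degrees of freedom, and $\gamma$ is the assurance expressed in a probability between 0 and 1.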

    # MOE that is not exceeded in 80% of replications (assurance = .80)
    MOE.gamma <- function(n) {
      df <- 4 * (n - 1)
      2 * qt(.975, df) * sqrt(3.324 / n * qchisq(.80, df) / df)
    }

    loss <- function(n) {
      (MOE.gamma(n) - 0.4558)^2
    }

    optimize(loss, c(100, 1000))

    ## $minimum
    ## [1] 255.576
    ##
    ## $objective
    ## [1] 2.900716e-18

Thus, according to the results, we need 256 persons per group (N = 1024 in total) to have an 80% probability of obtaining a MOE not larger than our target MOE. In that case, our expected MOE will be 0.4472.
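The assurance for a given sample size can also be computed directly. The sketch below assumes normally distributed errors, so that the estimated error variance is distributed as the true variance times a chi-square variate divided by its degrees of freedom, and it assumes that the true error variance is 3.324. The function name `assurance` is illustrative.

```r
# Probability that the obtained MOE is at most the target MOE,
# for n participants per group in the 2x2 independent design.
assurance <- function(n, target = 0.4558, mse = 3.324) {
  df     <- 4 * (n - 1)
  t_crit <- qt(.975, df)
  # Obtained MOE = 2 * t_crit * sqrt(s2 / n), with s2 = mse * X / df
  # and X chi-square distributed with df degrees of freedom, so
  # MOE <= target  <=>  X <= df * n * (target / (2 * t_crit))^2 / mse
  pchisq(df * n * (target / (2 * t_crit))^2 / mse, df)
}

assurance(c(247, 255, 256))
```

Under these assumptions, 256 per group is the first sample size with an assurance of at least .80, whereas the 247 per group from planning for expected MOE gives an assurance of only about one half.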