This is the third post about the Planning for Precision app (in the future I’ll explain the difference between Planning for Precision and Precision for Planning). Some background information about the application can be found here: http://the-small-s-scientist.blogspot.com/2017/04/planning-for-precision.html.

In this post, I want to present the simulation results for 4 designs with 4 conditions. The designs are: the counterbalanced design (see previous post), the fully-crossed design, the stimulus-within-condition design, and the stimulus-and-participant-within-condition design (the both-within-condition design). I have not included the participants-within-condition design, because this is simply the mirror image (so to speak) of the stimulus-within-condition design.

In one of my next posts, I will provide more background information about planning for precision, but some of the basics are as follows. We have a design with 4 treatment conditions, and what we want to do is estimate differences between the condition means by using contrasts. For instance, we may be interested in the (amount of) difference between the first mean (maybe because it is a control condition) and the average of the other three condition means: μ1 – (μ2 + μ3 + μ4)/3 = 1*μ1 – 1/3*μ2 – 1/3*μ3 – 1/3*μ4. The values {1, -1/3, -1/3, -1/3} are the contrast weights, and we use the term ψ for the value of the contrast.
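To make the contrast concrete, here is a minimal sketch in Python. The condition means are made-up numbers for illustration only, and the function name `contrast` is my own; only the weights come from the text above.

```python
from fractions import Fraction

# Hypothetical condition means (made up for illustration, not from the app)
means = [10.0, 8.0, 7.0, 6.0]

# Contrast weights from the text: first mean versus the average of the other three
weights = [Fraction(1), Fraction(-1, 3), Fraction(-1, 3), Fraction(-1, 3)]


def contrast(means, weights):
    """Return psi, the weighted sum of the condition means."""
    if sum(weights) != 0:
        raise ValueError("contrast weights must sum to zero")
    return float(sum(w * m for w, m in zip(weights, means)))


psi = contrast(means, weights)

# The same quantity written out directly: mu1 - (mu2 + mu3 + mu4) / 3
direct = means[0] - (means[1] + means[2] + means[3]) / 3
```

Both `psi` and `direct` describe the same difference; the weights are just a compact way of writing it down.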

The value of ψ is estimated on the basis of estimates of the population means, that is, the sample means or condition means. Due to sampling error, the contrast estimate varies from sample to sample and the amount of sampling error can be expressed by means of a confidence interval. Conceptually, the confidence interval expresses the precision of the estimate: the wider the confidence interval, the less precise the estimate is.

The Margin of Error (MOE) of an estimate is the half-width of the confidence interval, so the confidence interval is the estimate plus or minus MOE. We will take MOE as an expression of the precision of the estimate (the smaller the value of MOE, the more precise the estimate). Now, if you want to estimate an effect size, more precision (lower value of MOE; narrower confidence interval) is better than less precision (higher value of MOE; wider confidence interval). The app lets you specify the design and the contrast weights and helps you find the minimum required sample sizes (for participants and stimuli) for a given target MOE. (You can also play with the designs to see which design gives you the smallest expected MOE.)
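The relation between MOE and the confidence interval can be sketched in a few lines of Python. The estimate and MOE below are made-up values, and the function names are hypothetical helpers of my own, not part of the app.

```python
def interval_from_moe(estimate, moe):
    """Confidence interval as the estimate plus or minus MOE."""
    return (estimate - moe, estimate + moe)


def moe_from_interval(lower, upper):
    """MOE as the half-width of the confidence interval."""
    return (upper - lower) / 2


# Made-up contrast estimate and MOE, for illustration only
estimate, moe = 3.0, 0.5
lower, upper = interval_from_moe(estimate, moe)
recovered_moe = moe_from_interval(lower, upper)
```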

Crucially, if you plan for precision, you also want to have some assurance that the MOE you are likely to obtain in your actual experiment will not be larger than your target MOE. Compare this with power: 80% power means that the probability that you will reject the null hypothesis (if the assumed effect is real) is 80%. Likewise, an assurance MOE at 80% assurance means that there is an 80% probability that your obtained MOE will be no larger than that assurance MOE.
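The idea of assurance can be illustrated with a small simulation. The sketch below is deliberately much simpler than the designs in the app: it assumes a single sample of independent normal scores (no participant or stimulus random effects) and a normal-approximation critical value instead of a t-value. All names and parameter values are my own choices for illustration. The obtained MOE varies from sample to sample, and assurance MOE is simply a quantile of that distribution.

```python
import math
import random
import statistics


def simulate_moes(n, sigma, reps, seed=1):
    """Simulate the obtained MOE of a single mean in `reps` replications.

    Simplifying assumptions: one sample of n independent normal scores and a
    normal critical value (z) for the 95% confidence interval.
    """
    rng = random.Random(seed)
    z = statistics.NormalDist().inv_cdf(0.975)
    moes = []
    for _ in range(reps):
        sample = [rng.gauss(0.0, sigma) for _ in range(n)]
        standard_error = statistics.stdev(sample) / math.sqrt(n)
        moes.append(z * standard_error)
    return moes


def assurance_moe(moes, assurance):
    """Empirical quantile: at least a fraction `assurance` of the simulated
    MOEs are no larger than the returned value."""
    ordered = sorted(moes)
    index = max(0, math.ceil(assurance * len(ordered)) - 1)
    return ordered[index]


moes = simulate_moes(n=20, sigma=1.0, reps=2000)
mean_moe = statistics.mean(moes)   # average obtained MOE over replications
q80 = assurance_moe(moes, 0.80)    # 80% assurance MOE
q99 = assurance_moe(moes, 0.99)    # 99% assurance MOE
```

In this sketch the 80% assurance MOE is larger than the average MOE, which is why planning on the average alone gives you less assurance than you might want.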

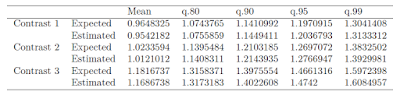

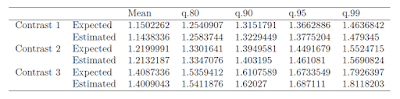

The simulations (with N = 10000 replications) estimate Expected MOE as well as Assurance MOE for assurances of .80, .90, .95, and .99, for 4 designs with 4 treatment conditions, with a total number of 48 participants and 24 stimuli (items). The MOEs are given for three standard contrasts: 1) the difference between the first mean and the mean of the other three, with weights {1, -1/3, -1/3, -1/3}; 2) the difference between the second mean and the mean of conditions three and four, with weights {0, 1, -1/2, -1/2}; 3) the difference between the third and fourth condition means, with weights {0, 0, 1, -1}.

I will present the results in separate tables for the 4 designs considered and include the percentage differences between the expected values of assurance MOE and the estimated values.
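For reference, a percentage difference of this kind can be computed as in the sketch below. I am assuming here that the difference is expressed relative to the expected value and that a positive sign means the simulated estimate is larger; the numbers are made up and are not results from the simulations.

```python
def percentage_difference(expected, estimated):
    """Signed difference of the estimated value from the expected value,
    as a percentage of the expected value (assumed definition)."""
    return 100.0 * (estimated - expected) / expected


# Made-up values for illustration only
expected_q80 = 0.500
estimated_q80 = 0.501
diff = percentage_difference(expected_q80, estimated_q80)
```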

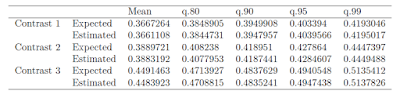

The fully crossed design

The results are in the following table.

The percentage differences between the expected quantiles (= assurance MOEs for a given assurance; i.e. q.80 is the expected or estimated 80% Assurance MOE) and the estimated quantiles are: .80: 0.11%; .90: 0.05%; .95: -0.14%; .99: -0.05%.

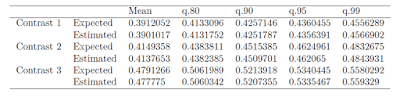

The counterbalanced design

The percentage differences between the expected quantiles and the estimated quantiles are: .80: 0.03%; .90: 0.13%; .95: 0.09%; .99: -0.23%.