I think trying to be scientific with a small s involves asking critical questions about common wisdom or common practice. In this post, I would like to focus on multiple comparisons in the context of ANOVA. What does common practice indicate?

Common wisdom suggests doing multiple comparisons only if the F-test is significant

“One way to begin an ANOVA is to run a general omnibus test. The advantage to starting here is that if the omnibus test comes up insignificant, you can stop your analysis and deem all pairwise comparisons insignificant. If the omnibus test is significant, you should continue with pairwise comparisons” (https://www.r-bloggers.com/r-tutorial-series-one-way-anova-with-pairwise-comparisons/)

“When we have a statistically significant effect in ANOVA and an independent variable of more than two levels, we typically want to make follow-up comparisons. There are numerous methods for making pairwise comparisons and this tutorial will demonstrate how to execute several different techniques in R.” (https://www.r-bloggers.com/r-tutorial-series-anova-pairwise-comparison-methods/)

And have a look at how the textbook I used to use in my statistics course explains it.

“It might seem a bit unhelpful that an ANOVA doesn’t tell you which groups are different from which, given that having gone to the trouble of running an experiment, you probably need to know more than ‘there’s some difference somewhere or other’. You might wonder, therefore, why we don’t just carry out a lot of t-tests, which would tell us very specifically whether pairs of group means differ. Actually, the reason has already been explained in Section 2.1.6.7: every time you run multiple tests on the same data you inflate the potential Type I errors that you make. However, we’ll return to this point in Section 11.5 when we look at how we follow up an ANOVA to discover where the group difference lie.” (Field, 2015, p. 442).

Although, in fairness, on p. 459 Field writes:

“The least significance difference (LSD) pairwise comparison makes no attempt to control Type I error and is equivalent to performing multiple t-tests on the data. The only difference is that LSD requires the overall ANOVA to be significant.”

This is meant to inform about the relative merits of one post hoc procedure compared to another in terms of Type I and Type II error. Crucially, it is not mentioned that the other post hoc procedures require the overall ANOVA to be significant (as common wisdom seems to suggest). However, his flow chart of the ANOVA procedure (p. 460) clearly suggests that multiple comparison procedures should be used as post hoc procedures (after the ANOVA is significant).

Thus, common “statistical” wisdom seems to suggest that multiple comparison procedures are to be used as post hoc procedures following up a significant omnibus F-test. And the reason is that this two-step procedure minimizes the probability of type I errors.

Now, let’s ask ourselves whether this common sense is, well, sensible.

Multiple comparisons only after significant F-test affects power negatively

Wilcox (2017) contains some useful information regarding our question. In his discussion of the much-used Tukey HSD procedure (the Tukey-Kramer method), he references Bernhardson (1975), who shows that the probability of at least one type I error among pairwise comparisons of estimates of equal population means (i.e. true null-hypotheses) is no longer equal to $\alpha$ if the procedure is only carried out following a significant omnibus test. That is, if we use our beloved two-step procedure.

The consequence of the two-step procedure for the Tukey HSD is that $\alpha$ is reduced. Thus, if we want our multiple comparisons procedure to generate one type I error or more with a probability of at most $\alpha$, using the two-step procedure leads to an actual $\alpha$ that is lower than intended. This is, of course, bad news, because in the event that not all of the null-hypotheses are true, lowering $\alpha$ increases $\beta$, the probability of not rejecting when the null-hypothesis is false (keeping the sample size constant, of course). In other words, the two-step procedure decreases the power of the multiple comparison procedure.

In the words of Wilcox (2017):

“In practical terms, when it comes to controlling the probability of at least one type I error, there is no need to first reject with the ANOVA F test to justify using the Tukey-Kramer method. If the Tukey-Kramer method is used only after the F test rejects, power can be reduced. Currently, however, common practice is to use the Tukey-Kramer method only if the F-test rejects. That is, the insight reported by Bernhardson is not yet well known.” (p. 385).

In conceptual terms, the fact that the probability of at least one type I error in the multiple comparison procedure is smaller than $\alpha$ if it is only carried out after the F-test rejects is pretty clear, at least to me. Suppose we reject if the p-value of the F-test is smaller than or equal to 5%. If all population means are equal, this will also be the probability that we conduct the multiple comparison test over repeated replications of the same experiment. Of that 5%, not every application of the procedure will result in at least one type I error. Indeed, a puzzling fact for many beginning researchers is that the F-test can be significant while none of the pairwise comparisons is. In other words, some of those 5% of the cases in which we perform the procedure following a significant F-test will probably not reject any of the pairwise null-hypotheses, unless it is guaranteed that at least one type I error per application will be made.
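The same argument can be written in symbols. This is only a sketch: the event names $A$ (the F-test rejects) and $B$ (the multiple comparison procedure rejects at least one true pairwise null-hypothesis) are introduced here for illustration, and $P(A) = \alpha$ assumes that all population means are equal and that the F-test is carried out at level $\alpha$.

```latex
\begin{align*}
P(\text{at least one type I error, two-step})
  &= P(A \cap B) \\
  &= P(A) - P(A \cap B^{c}) \\
  &= \alpha - P(A \cap B^{c}) \\
  &\le \alpha .
\end{align*}
```

The inequality is strict whenever $P(A \cap B^{c}) > 0$, that is, whenever the F-test can be significant while none of the pairwise comparisons is.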

(With no adjustment of $\alpha$ for multiple comparisons, this will happen (with high probability, so no guarantee) if a huge number of pairwise comparisons are made. For instance, with 99 unadjusted multiple comparisons the probability of at least one type I error is about 99%, treating the comparisons as independent. This is why it makes sense to demand that the F-test is significant before testing multiple comparisons with the LSD procedure, although the latter seems to run into trouble with more than 3 groups (Wilcox, 2017).)
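The 99% figure can be checked with a few lines of R. This is a minimal sketch that assumes all null-hypotheses are true and that the tests are independent, which pairwise comparisons on the same groups are not, so the numbers are only an approximation. The variable names `alpha`, `m`, `fwer` and `fwer_four_groups` are chosen here for illustration.

```r
alpha <- 0.05   # per-comparison significance level
m     <- 99     # number of unadjusted comparisons, as in the example in the text

# Probability of at least one type I error among m independent tests
# when every null-hypothesis is true (independence is an assumption)
fwer <- 1 - (1 - alpha)^m
round(fwer, 3)

# The same calculation for the six pairwise comparisons of four groups
fwer_four_groups <- 1 - (1 - alpha)^choose(4, 2)
round(fwer_four_groups, 3)
```

Under these assumptions the familywise error probability is already well above the nominal 5% with only six comparisons, which is the reason unadjusted procedures such as the LSD need some additional safeguard.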

A quick simulation study

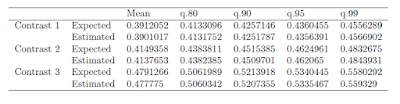

My hunch is that the two-step procedure is unnecessary for the Tukey-Kramer method as well as for other multiple comparison procedures (the exception being Fisher’s LSD procedure, which was designed as a post hoc procedure to be used as a follow-up after a significant F-test, as Field (2015) rightly points out), but I only focused on the Tukey-Kramer method. What I did was a simple simulation study with a four-group between-subjects design (all $\mu$’s equal) and estimated the probability of at least one type I error both with and without using the two-step procedure.

```r
set.seed(456)

# number of groups
ngr <- 4
# number of participants per group
n <- 40
# group is a factor
gr <- factor(rep(1:ngr, each = n))

# vector for storing rejections of the F-test
Reject <- rep(0, 10000)
# vector for storing the number of rejections among the multiple comparisons
RejectHSD <- rep(0, 10000)

for (i in 1:10000) {
  # all population means are equal, so every null-hypothesis is true
  y <- rnorm(ngr * n)
  mod <- aov(y ~ gr)
  Reject[i] <- anova(mod)$"Pr(>F)"[1] <= .05
  # adjusted p-values of the pairwise comparisons
  PS <- TukeyHSD(mod)$gr[, 4]
  RejectHSD[i] <- sum(PS <= .05)
}

# probability type I error F-test
sum(Reject) / length(Reject)
## [1] 0.0515

# probability at least one type I error Tukey HSD
sum(RejectHSD > 0) / length(RejectHSD)
## [1] 0.0503

# probability at least one type I error under the two-step procedure
# (the F-test rejects and at least one Tukey HSD comparison rejects)
sum(RejectHSD[Reject == TRUE] > 0) / length(RejectHSD)
## [1] 0.0424
```

Even though a single (relatively tiny) simulation (which, by the way, nonetheless takes a long time to run) is not necessarily convincing, it does illustrate the main points of this post. First, the probability of at least one incorrect rejection using the TukeyHSD function is close to .05. With this particular random seed it even performs a little better than the ANOVA F-test: .0503 versus .0515. This illustrates that even without considering whether the omnibus test is significant, the main demand of not rejecting too many true null-hypotheses is completely satisfied. So, in practical terms, you can safely ignore the omnibus test if your concerns are about $\alpha$.
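To judge how close .0503 and .0515 really are to .05, it helps to look at the Monte Carlo uncertainty of the estimates. The sketch below uses the usual binomial standard error with a normal approximation, which is an assumption added here and not part of the original simulation; the names `nsim`, `p_hat`, `se` and `ci` are illustrative.

```r
nsim  <- 10000                                    # replications in the simulation
p_hat <- c(F_test = 0.0515, Tukey_HSD = 0.0503)   # estimates reported in the text

# Monte Carlo standard error of an estimated proportion
se <- sqrt(p_hat * (1 - p_hat) / nsim)

# Rough 95% intervals based on the normal approximation
ci <- cbind(lower    = p_hat - 1.96 * se,
            estimate = p_hat,
            upper    = p_hat + 1.96 * se)
round(ci, 4)
```

With 10000 replications the standard error is roughly .002, so both estimates are compatible with a true rejection probability of .05.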

Second, the probability of incorrectly rejecting at least one true pairwise null-hypothesis after the ANOVA F-test is significant is estimated to be .0424. This shows that the two-step procedure leads to a larger decrease in the actual type I error probability than is wanted. Even though this may seem good news from the perspective of avoiding type I errors, the downside is that pairwise null-hypotheses that are false (and potentially important) may not be detected.
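The loss of power can be illustrated with a variation on the simulation. The following is a sketch under assumptions that are not in the original study: one group mean is shifted by half a standard deviation (`shift`), the number of replications is reduced to 1000 (`nsim`) to keep the run time short, and the seed is arbitrary. It compares how often at least one false pairwise null-hypothesis is rejected with and without requiring a significant F-test first.

```r
set.seed(123)    # arbitrary seed, only for reproducibility
ngr   <- 4       # number of groups
n     <- 40      # participants per group
nsim  <- 1000    # assumed number of replications (kept small for speed)
shift <- 0.5     # assumed effect: the mean of group 4 is 0.5 SD higher

gr <- factor(rep(1:ngr, each = n))
mu <- rep(c(0, 0, 0, shift), each = n)   # population mean of every observation

f_rejects   <- logical(nsim)   # does the omnibus F-test reject?
hsd_detects <- logical(nsim)   # does Tukey HSD reject a false null-hypothesis?

for (i in seq_len(nsim)) {
  y   <- rnorm(ngr * n, mean = mu)
  mod <- aov(y ~ gr)
  f_rejects[i] <- anova(mod)$"Pr(>F)"[1] <= .05
  hsd <- TukeyHSD(mod)$gr
  # comparisons involving group 4 are the false null-hypotheses
  false_nulls <- grepl("4", rownames(hsd))
  hsd_detects[i] <- any(hsd[false_nulls, "p adj"] <= .05)
}

# power of Tukey HSD on its own
power_hsd <- mean(hsd_detects)
# power when Tukey HSD is only consulted after a significant F-test
power_two_step <- mean(hsd_detects & f_rejects)
c(hsd = power_hsd, two_step = power_two_step)
```

By construction the two-step estimate can never exceed the estimate for Tukey HSD on its own, because every detection under the two-step procedure is also a detection without the F-test requirement. How large the gap is depends on the assumed effect and sample size.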