Here I would like to explain the procedure for sample size planning for one-way and two-way (factorial) between-subjects designs. We will consider examples that are based on, and described in, Haans (2018).

The first example: one-way design

The first example considers the effect of students' seating location on their educational performance. Seating location is defined as distance from the teacher and operationalized in terms of the row the student is seated in, with the first row being the closest to the teacher and the fourth row being the furthest away. Twenty students are randomly assigned to one of the four possible rows, so N = 20 and n = 5 per row. The dependent variable is the course grade of the student. (Note: the data and study are hypothetical.)

As Haans (2018) explains, one psychological theory explaining the effect of seating position on educational performance is based on social influence. This theory posits that, due to the social influence of the teacher, the students who are seated closest to the teacher find themselves in a state of undivided attention. This undivided attention causes their educational performance to be better than that of the students who are seated further away.

In operational terms, then, we may expect that first-row students will have a better average grade than students seated in the other rows. So, the quantitative research question we are interested in is:

“How much do the average grades differ between students seated in the first row and students seated in the other rows?”

We can estimate this quantity with a Helmert contrast, in which we assign a contrast weight of 1 to the mean of the first-row grades and a weight of -1/3 to the mean of the grades in each of the other rows.
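Written out, with μ1 to μ4 denoting the population mean grades of rows 1 to 4 (the symbols are my own notation here, not necessarily that of Haans), the contrast is:

```latex
\[
\psi = 1\cdot\mu_1 - \tfrac{1}{3}\mu_2 - \tfrac{1}{3}\mu_3 - \tfrac{1}{3}\mu_4
     = \mu_1 - \frac{\mu_2 + \mu_3 + \mu_4}{3}
\]
```

So the contrast is simply the first-row mean minus the average of the other three row means.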

Haans (2018) gives us the following results. The contrast estimate equals 2.00, 95% CI [0.27, 3.73]. In order to interpret this more easily, we divide the estimate and the confidence limits by the square root of the Mean Square Error, to obtain the standardized estimate and the standardized confidence interval (not to be confused with the confidence interval of the standardized estimate, but that’s a different story). The result is: 1.26, 95% CI [0.17, 2.36].

To answer the research question: the estimated difference equals 1.26 standard deviations, which according to rules of thumb frequently used in psychology is a large difference. The CI shows the enormous amount of uncertainty in this estimate: population values between 0.17 (small) and 2.36 (very large) are also consistent with the observed data and our statistical assumptions. So, it seems safe to conclude that there is a positive effect of seating position, but the width of the CI makes it clear that the data do not tell us enough about the size of the effect. The precision is simply too low.

The precision, expressed as the standardized margin of error (half the width of the standardized CI), is f = 1.09, which according to my rules of thumb is very imprecise (I consider f = 0.65 to be barely tolerable).
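As a rough check on where f = 1.09 comes from, here is a minimal sketch. It assumes that f is the standardized margin of error, f = t(.975, df) × sqrt(Σw² / n), with df = 16 error degrees of freedom. The critical value is hard-coded from a standard t table, and the variable names are mine.

```python
import math

# Helmert contrast weights: first row versus the mean of the other three rows.
weights = [1, -1/3, -1/3, -1/3]
n_per_row = 5                                # N = 20 students over 4 rows
df_error = len(weights) * (n_per_row - 1)    # 4 * (5 - 1) = 16

# Assumption: two-sided 95% critical value of t with 16 df, taken from a
# standard t table (about 2.120) rather than computed here.
t_crit = 2.120

# Assumed definition of f: half-width of the CI in units of the
# within-row standard deviation.
sum_w2 = sum(w ** 2 for w in weights)        # 1 + 3 * (1/9) = 4/3
f_observed = t_crit * math.sqrt(sum_w2 / n_per_row)

# Half-width of the reported standardized CI [0.17, 2.36].
f_reported = (2.36 - 0.17) / 2

print(round(f_observed, 2), f_reported)
```

Both numbers agree with the reported f = 1.09 up to rounding of the published confidence limits.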

So, let’s plan for a replication study with a reasonably precise estimate of f = 0.40, with 80% assurance. (Note: for some advice on setting the target MoE, see the post “Planning with assurance, with assurance”.) I’ve used the app https://gmulder.shinyapps.io/PlanningFactorialContrasts/ with the default values for a single-factor between-subjects design with 4 conditions. According to the app, we need n = 36 participants per condition (making a total of N = 144).

(For more detailed information on sample size planning for contrast analysis see http://small-s.science/?p=10, and for some guidelines for setting the target MoE see http://small-s.science/?p=14.)
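The n = 36 can be reproduced approximately with a few lines of Python. This is only a sketch under my own assumptions, not the code of the app. It assumes the MoE with assurance γ is t(.975, df) × sqrt(Σw² / n) × sqrt(χ²(γ, df) / df) with df = 4(n − 1), and it replaces exact quantiles with standard approximations (Cornish-Fisher for t, Wilson-Hilferty for chi-square) so that only the standard library is needed. All function names are hypothetical.

```python
import math
from statistics import NormalDist


def t_quantile(p, df):
    """Approximate t quantile (Cornish-Fisher expansion around the normal)."""
    z = NormalDist().inv_cdf(p)
    return (z + (z**3 + z) / (4 * df)
            + (5 * z**5 + 16 * z**3 + 3 * z) / (96 * df**2))


def chi2_quantile(p, df):
    """Approximate chi-square quantile (Wilson-Hilferty)."""
    z = NormalDist().inv_cdf(p)
    a = 2 / (9 * df)
    return df * (1 - a + z * math.sqrt(a)) ** 3


def assurance_moe(n, weights, n_cells, assurance, conf=0.95):
    """Standardized MoE that is not exceeded with probability `assurance`."""
    df = n_cells * (n - 1)
    t_crit = t_quantile(1 - (1 - conf) / 2, df)
    se = math.sqrt(sum(w**2 for w in weights) / n)
    inflation = math.sqrt(chi2_quantile(assurance, df) / df)
    return t_crit * se * inflation


def plan_n(target, weights, n_cells, assurance):
    """Smallest n per condition whose assurance MoE does not exceed target."""
    n = 2
    while assurance_moe(n, weights, n_cells, assurance) > target:
        n += 1
    return n


helmert = [1, -1/3, -1/3, -1/3]
n_planned = plan_n(0.40, helmert, n_cells=4, assurance=0.80)
print(n_planned, 4 * n_planned)
```

With these inputs the sketch arrives at the same n = 36 per condition (N = 144) as the app. The approximations are poor for very small n, so for serious planning I would still use the app or exact quantile functions.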

The second example: factorial design

Our second example is also taken from Haans (2018). It considers the same phenomenon: the effect of students’ seating distance from the teacher on their educational performance.

A second theory holds that the effect is mainly caused by the teacher making less eye contact with the students sitting farther back in the lecture hall.

To test that theory, an experiment was conducted with N = 72 participants attending a lecture. The lecture was given to two independent groups of 36 participants. The first group attended the lecture while the teacher was wearing dark sunglasses; the second group attended the lecture while the teacher was not wearing sunglasses. Again, all participants were randomly assigned to 1 of 4 possible rows. The dependent variable was the score on a 10-item questionnaire about the contents of the lecture.

Now, if the eye contact of the teacher is the causal variable, we may expect that in this experimental setup the difference between the average score of the persons seated in the first row and the average of the other rows will be smaller in the condition where the teacher wears sunglasses than in the condition where the teacher does not, as wearing sunglasses prevents eye contact between the teacher and the students. Our quantitative question is therefore:

“How much does the contrast between the first row and the other rows differ between the conditions with and without sunglasses?”

In other words, we are interested in the size of the interaction effect.

I’ve downloaded the dataset from http://pareonline.net/sup/v23n9.zip (between2by4data.sav) and specified the following syntax in SPSS:

UNIANOVA retention BY sunglasses location

/LMATRIX = "Interaction contrast" sunglasses*location 1 -1/3 -1/3 -1/3 -1 1/3 1/3 1/3 intercept 0

/DESIGN= sunglasses*location.
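The eight coefficients in the LMATRIX subcommand are the products of a contrast over sunglasses and the Helmert contrast over location. A minimal sketch of that construction follows. It assumes that the cells are ordered with sunglasses varying slowest and location varying fastest, and which sunglasses level gets the +1 depends on how that factor is coded in the data file.

```python
from fractions import Fraction

# Contrast over the two sunglasses conditions (sign depends on level coding).
sunglasses = [Fraction(1), Fraction(-1)]
# Helmert contrast over location: first row versus the mean of rows 2 to 4.
location = [Fraction(1), Fraction(-1, 3), Fraction(-1, 3), Fraction(-1, 3)]

# Interaction weights: all products, with location varying fastest
# within each level of sunglasses.
interaction = [s * loc for s in sunglasses for loc in location]

print(" ".join(str(w) for w in interaction))
# prints: 1 -1/3 -1/3 -1/3 -1 1/3 1/3 1/3

# The weights sum to zero, and their squares sum to 8/3.
print(sum(interaction), sum(w * w for w in interaction))
```

The sum of squared weights (8/3) is the quantity that enters the standard error of the contrast, so it also matters for sample size planning.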

The SPSS analysis gives a contrast estimate of 1.0, 95% CI [-0.33, 2.33]. If we standardize this with the square root of the within-condition variance (a condition being a combination of the levels of the two factors), we get 0.82, 95% CI [-0.27, 1.90].

So, it appears that the difference between the mean of the first row and that of the other rows is on average 1.0 points larger in the condition without sunglasses than in the condition with sunglasses. This corresponds to a large difference (dwith = .82). However, the CI also contains negative population differences (albeit smallish ones), so even though the results are promising for the eye-contact theory, a critical reviewer of the study will not be persuaded: negative differences contradict the substantive hypothesis.

Again, the confidence interval is so wide that effects ranging from small negative effects to huge positive effects are considered plausible. Since the results are promising for the theory, a replication study with more precision may be needed to persuade the critics. Let’s plan for a precision of f = .25 with 95% assurance.

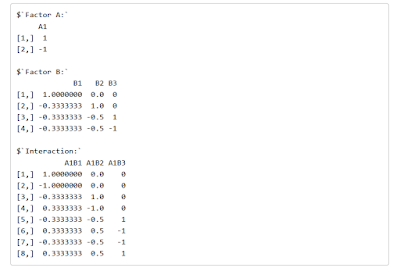

I’ve used the app https://gmulder.shinyapps.io/PlanningFactorialContrasts/, specifying a factorial design in which factor A has a = 2 levels and factor B has b = 4 levels. The result is that for the interaction contrast with f = .25 and assurance = .95, we need 175 participants per combination of the two factors. This means that a total of N = 8 × 175 = 1400 participants must be recruited.

I’ve taken this from the app output shown in Figure 1.

Figure 1: Output of sample size planning

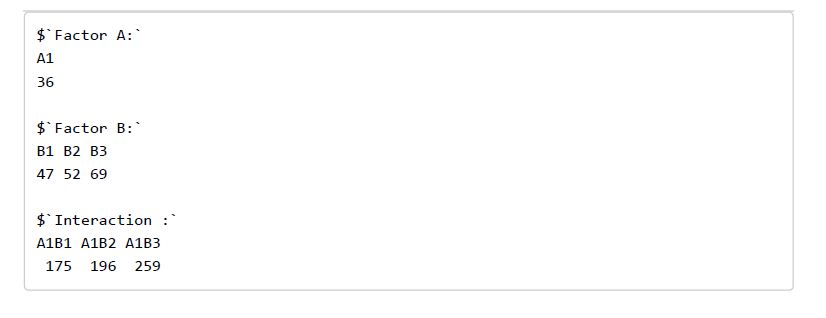

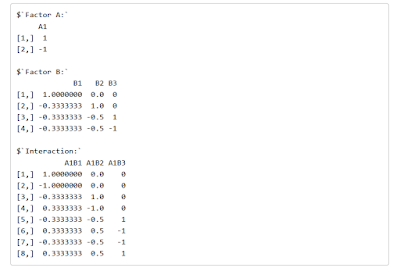

I’ve looked at the “Contrast Summary” tab to check that interaction A1B1 is the correct one (see Figure 2).

Figure 2. Summary of contrast weights.

What’s important in the above figure is that the set of weights for A1B1 matches the set of weights used to get the contrast estimate in SPSS (in the LMATRIX subcommand), so that’s how we know that A1B1 is the contrast we want. (Note: if you switch the number of levels in the app, that is, use 4 levels for A and 2 for B, the interaction weights will match perfectly.)
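As a rough cross-check of the n = 175 reported by the app, the sketch below evaluates the standardized MoE of the interaction contrast (sum of squared weights 8/3, 8 cells). It is not the app's code. It assumes f = t(.975, df) × sqrt(Σw² / n) with df = 8(n − 1), multiplied by sqrt(χ²(γ, df) / df) when assurance γ is requested, and it uses standard approximations to the t and chi-square quantiles. The function name `moe` is mine.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def moe(n, sum_w2, n_cells, assurance=None, conf=0.95):
    """Standardized MoE of a contrast; the assurance MoE if `assurance` is set."""
    df = n_cells * (n - 1)
    z = STD_NORMAL.inv_cdf(1 - (1 - conf) / 2)
    # Cornish-Fisher approximation to the t critical value.
    t_crit = (z + (z**3 + z) / (4 * df)
              + (5 * z**5 + 16 * z**3 + 3 * z) / (96 * df**2))
    f = t_crit * math.sqrt(sum_w2 / n)
    if assurance is not None:
        # Wilson-Hilferty approximation to the chi-square quantile over df.
        a = 2 / (9 * df)
        chi2_over_df = (1 - a + STD_NORMAL.inv_cdf(assurance) * math.sqrt(a)) ** 3
        f *= math.sqrt(chi2_over_df)
    return f


sum_w2 = 8 / 3                                  # squared interaction weights
observed = moe(9, sum_w2, n_cells=8)            # original study: 72 / 8 = 9 per cell
at_174 = moe(174, sum_w2, n_cells=8, assurance=0.95)
at_175 = moe(175, sum_w2, n_cells=8, assurance=0.95)

print(round(observed, 2), round(at_174, 4), round(at_175, 4), 8 * 175)
```

Under these assumptions the observed precision is about 1.09, which matches the half-width of the standardized CI [-0.27, 1.90], and n = 175 is the first sample size per cell for which the assurance MoE drops below .25.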

Reference

Haans, A. (2018). Contrast Analysis: A Tutorial. Practical Assessment, Research & Evaluation, 23(9). Available online: http://pareonline.net/getvn.asp?v=23&n=9
